With the constant growth of artificial intelligence, new algorithms and products reach the market daily, each with its own functionality in various fields. Like software systems, AI systems have their own lifecycle, and quality testing is a critical component of it. However, in traditional software, quality testing verifies that the behavior generated by the logic is the desired one, whereas in AI systems the primary objective is to ensure that the logic itself is in line with the expected behavior. Hence, the object under test in AI systems is the logic itself rather than the desired behavior. Also, unlike traditional software systems, AI systems require careful consideration of tradeoffs, such as the balance between overall accuracy and CPU usage.

Despite their differences, the seven principles of software testing outlined by the ISTQB (International Software Testing Qualifications Board) can be readily applied to AI systems. This article examines each principle in depth and explores how it relates to the testing of AI systems. We will base this exposition on Krisp’s Voice Processing algorithms, a family of technologies aimed at enhancing voice communication. Each of Krisp’s voice-related algorithms, based on machine learning paradigms, is amenable to the general testing principles we discuss below. However, for the sake of illustration and as our reference point, we will use Krisp’s Noise Cancellation technology (referred to as NC). This technology is based on an algorithm that operates on the device in real time and enhances the audio and video call experience by eliminating disruptive background noises. The algorithm’s efficacy can be influenced by diverse factors, including usage conditions, environments, device specifications, and encountered scenarios. Rigorous testing of all these parameters is therefore indispensable. It is accomplished by employing a diverse range of test datasets and conducting both subjective and objective evaluations, while keeping the seven testing principles in mind.

1. Testing shows the presence of defects, not their absence

This principle underscores that, despite the effort invested in finding and fixing errors in software, testing cannot guarantee that the product will be entirely defect-free. Even after comprehensive testing, defects can still persist in the system. Hence, it is crucial to continually test and refine the system to minimize the risk of defects that may cause harm to users or the organization.

This is particularly significant in the context of AI systems, as AI algorithms are generally not expected to achieve 100% accuracy. For instance, testing may reveal that an underlying AI-based algorithm, such as the NC algorithm, has issues when canceling out white noise. Even after the problem is addressed for the observed scenarios and accuracy improves, there is no guarantee that the issue will never resurface in other environments and be detected by users. Testing only serves to reduce the likelihood of such occurrences.
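A back-of-the-envelope calculation can make this point concrete. The sketch below is an illustration, not part of Krisp’s process: it assumes test cases are independent and representative of real usage (rarely fully true in practice), and the function name is hypothetical. It computes the largest failure rate that is still statistically consistent with a run of tests in which no failure was observed.

```python
def failure_rate_upper_bound(n_passed: int, confidence: float = 0.95) -> float:
    """Upper bound on the true failure rate after n_passed tests with zero failures.

    Solves (1 - p) ** n_passed = 1 - confidence for p.
    Assumption: tests are independent samples of real-world usage.
    """
    if n_passed <= 0:
        raise ValueError("n_passed must be positive")
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return 1.0 - (1.0 - confidence) ** (1.0 / n_passed)


# Even a clean run leaves room for defects; more tests only shrink the bound.
for n in (30, 300, 3000):
    print(n, round(failure_rate_upper_bound(n), 5))
```

Under these assumptions the bound never reaches zero for any finite number of tests, which is the principle in numeric form.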

2. Exhaustive testing is impossible

The second testing principle highlights that achieving complete test coverage by testing every possible combination of inputs, preconditions, and environmental factors is not feasible. This challenge is even more profound in AI systems since the number of possible combinations that the algorithm needs to cover is often infinite.

For instance, in the case of the NC algorithm, testing every microphone device, in every condition, with every existing noise type would be an endless endeavor. Therefore, it is essential to prioritize testing based on risk analysis and business objectives. For example, testing should focus on ensuring that the algorithm does not cause voice cutouts with the most popular office microphone devices in typical office conditions.

By prioritizing testing activities based on identified risks and critical functionalities, testing efforts can be optimized to ensure that the most important defects are detected and addressed first. This approach helps to balance the need for thorough testing with the practical realities of finite time, resources, and costs associated with testing.
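Risk-based prioritization can be sketched in a few lines. The example below is a minimal illustration under stated assumptions: the scenario names, usage shares, severity scale, and the simple `usage_share * severity` score are all hypothetical and are not taken from Krisp’s actual risk analysis.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Scenario:
    name: str
    usage_share: float  # hypothetical: fraction of users in this scenario (0..1)
    severity: int       # hypothetical: 1 (cosmetic) .. 5 (voice cutouts)

    @property
    def risk(self) -> float:
        # Simple risk score: how common the scenario is times how bad a defect would be.
        return self.usage_share * self.severity


def prioritize(scenarios: List[Scenario], budget: int) -> List[Scenario]:
    """Return the `budget` highest-risk scenarios, highest risk first."""
    return sorted(scenarios, key=lambda s: s.risk, reverse=True)[:budget]


# Illustrative data only.
SCENARIOS = [
    Scenario("studio mic, construction site", usage_share=0.01, severity=5),
    Scenario("office headset, typical office noise", usage_share=0.40, severity=5),
    Scenario("phone earbuds, street traffic", usage_share=0.15, severity=3),
    Scenario("laptop mic, cafe", usage_share=0.25, severity=4),
]

for scenario in prioritize(SCENARIOS, budget=2):
    print(f"{scenario.risk:.2f}  {scenario.name}")
```

With a limited budget, the rare but severe construction-site case is deferred in favor of the common office setup, mirroring the office microphone example above.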

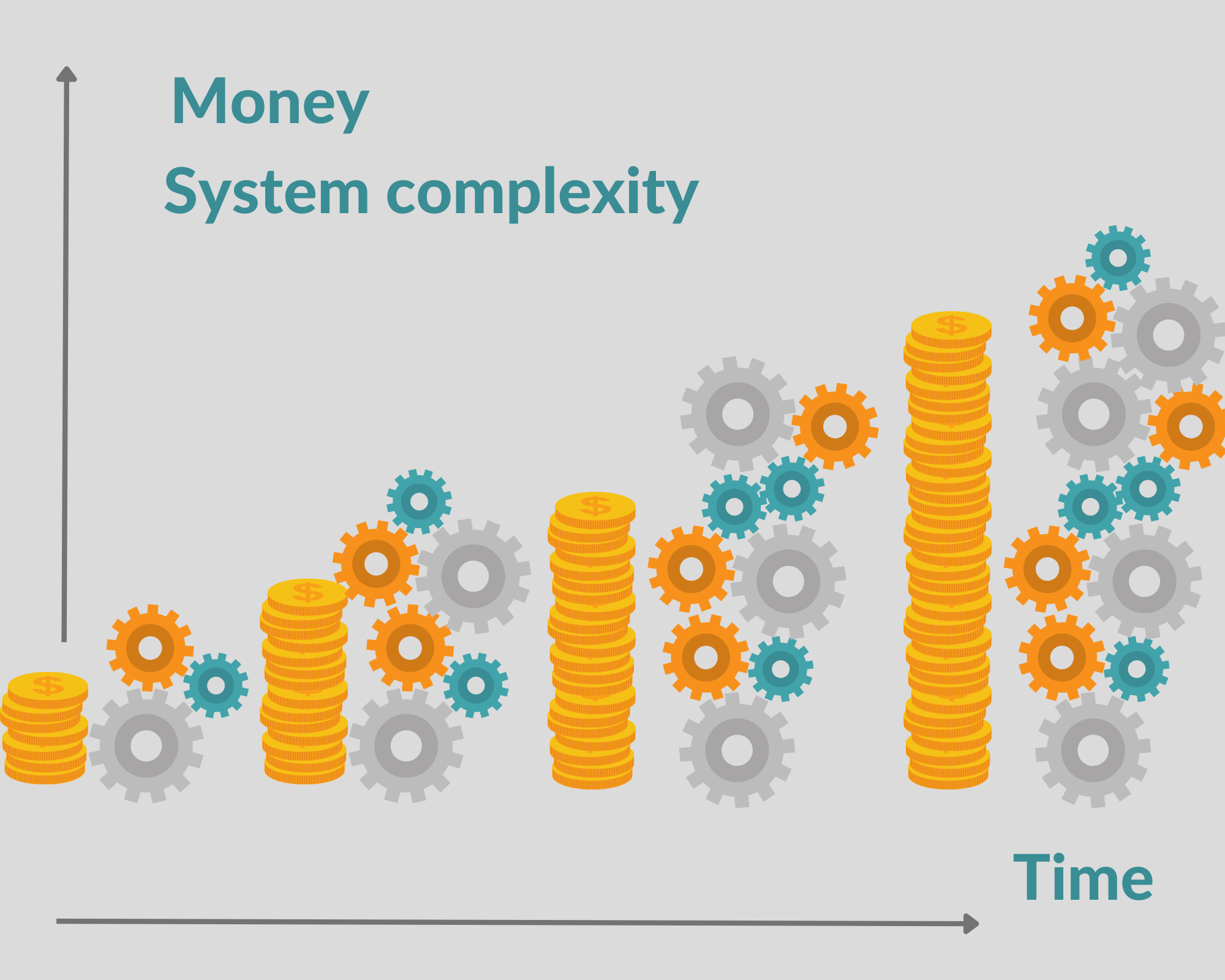

3. Early testing saves time and money

This principle emphasizes the importance of conducting testing activities as early as possible in the development lifecycle, and it holds for AI products as well. Early testing is a cost-effective way to identify and resolve defects in the initial phases, reducing expenses and time, whereas errors detected at the last minute tend to be the most expensive to rectify.

Ideally, testing should start during the algorithm’s research phase, well before it becomes an actual product. This can help to identify possible undesirable behavior and prevent it from propagating to the later stages. This is especially crucial since the AI algorithm training process can take weeks if not months. Detecting errors in the late stages can lead to significant release delays, impacting the project’s timeline and budget.

Testing the algorithm’s initial experimental version can help to identify stability concerns and reveal any limitations that require further refinement. Furthermore, early testing helps to validate whether the selected solution and research direction are appropriate for addressing the problem at hand. Additionally, analyzing and detecting patterns in the algorithm’s behavior assists in gaining insights into the research trajectory and potential issues that may arise.

In the context of the NC project, early testing can help to evaluate whether the algorithm behaves stably across different scenarios, for example whether it retains considerable noise after a prolonged period of silence. Finding and addressing such patterns at an early stage makes their resolution smoother and more efficient.

4. Defects cluster together

The principle of defect clustering suggests that software defects and errors are not distributed randomly but instead tend to cluster or aggregate in certain areas or components of the system. This means that if multiple errors are found in a specific area of the software, it is highly likely that there are more defects lurking in that same region. This principle also applies to AI systems, where the discovery of logically undesired behavior is a red flag that future tests will likely uncover similar issues.

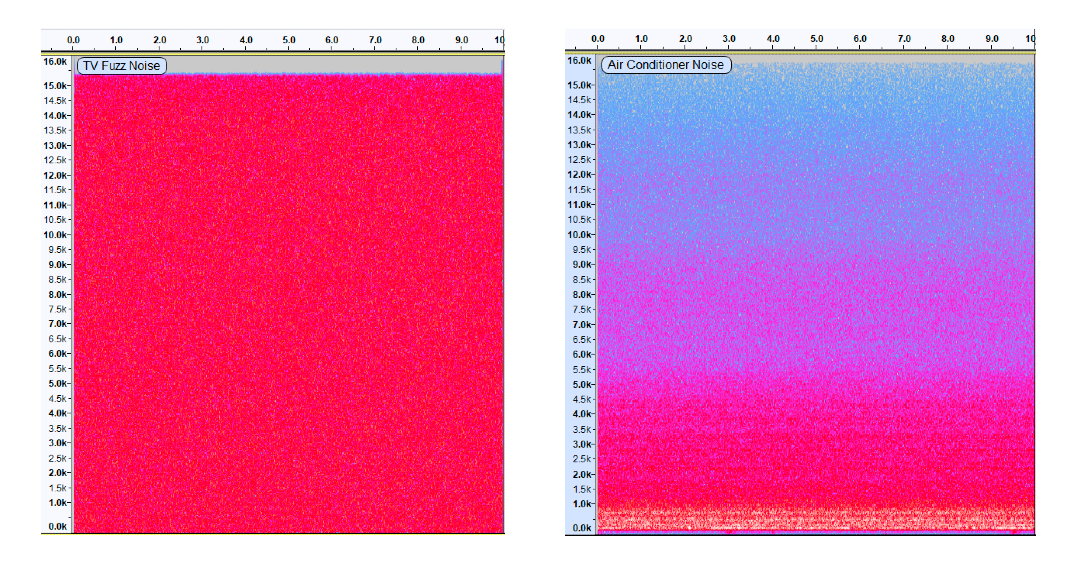

For example, suppose a noise cancellation algorithm struggles to cancel TV fuzz noise when it is presented alongside speech. Further tests may then reveal the same problem when the algorithm is faced with background noise from an air conditioner. Although the two noises may sound alike, their spectrograms (visual representations of frequency content over time) show a noticeable difference.

[Figure: spectrograms of TV fuzz noise and AC noise. Credits: https://www.youtube.com/]

Identifying high-risk areas that could potentially damage user experience is another way that the principle of defect clustering can be beneficial. During the testing process, these areas can be given top priority. Using the same noise cancellation algorithm as an example, the highest-risk area is the user’s speech quality, and voice-cutting issues are considered the most critical defects. If the algorithm exhibits voice-cutting behavior in one scenario, it is possible that it may also cause voice degradation in other scenarios.
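One simple way to surface such clusters is to tag every test case and count failures per tag. The sketch below assumes a hypothetical result format of `(tags, passed)` pairs; the tag names and the sample results are invented for illustration and do not describe real Krisp test data.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def failure_clusters(
    results: Iterable[Tuple[Tuple[str, ...], bool]], min_failures: int = 2
) -> List[Tuple[str, int]]:
    """Count failed test cases per tag and return tags with at least min_failures."""
    counts: Counter = Counter()
    for tags, passed in results:
        if not passed:
            # sorted(set(...)) counts each tag once per test, in a stable order.
            counts.update(sorted(set(tags)))
    return [(tag, n) for tag, n in counts.most_common() if n >= min_failures]


# Illustrative results only: (tags, passed)
RESULTS = [
    (("steady-noise", "tv-fuzz"), False),
    (("steady-noise", "air-conditioner"), False),
    (("babble", "cafe"), True),
    (("steady-noise", "fan"), False),
    (("keyboard",), False),
]

print(failure_clusters(RESULTS))
```

A tag that accumulates failures across otherwise different test cases marks an area worth testing more heavily, which is how the clustering principle turns into a concrete test plan.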

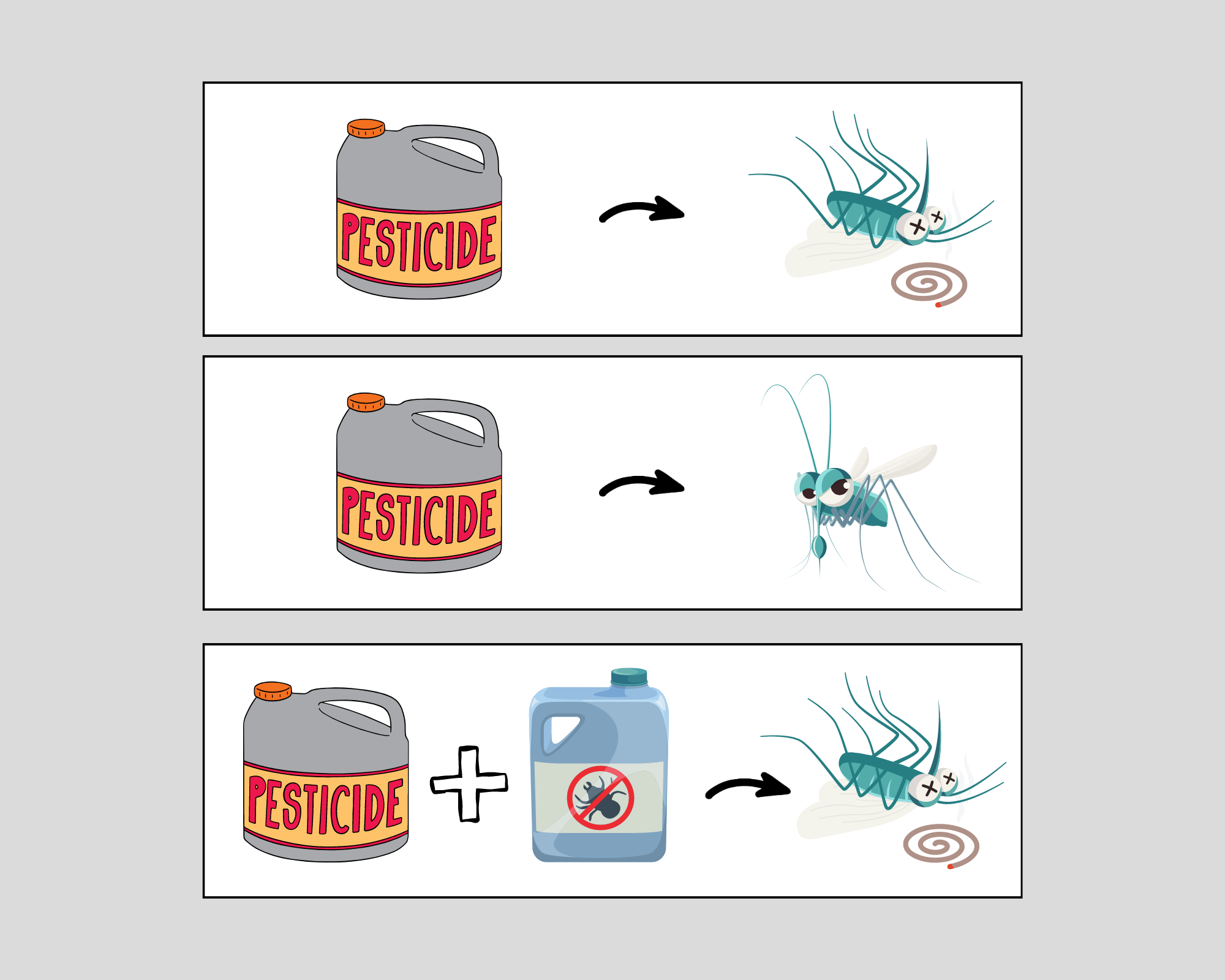

5. Beware of the pesticide paradox

The pesticide paradox is a well-known phenomenon in pest control, where repeatedly applying the same pesticide lets pests develop immunity and resistance. The same principle applies to software and AI testing, where the “pesticide” is testing, and the “pests” are software bugs. Running the same set of tests repeatedly leads to diminishing effectiveness: the defects those tests can catch get fixed, and the system effectively becomes immune to them.

To avoid the pesticide paradox in software and AI testing, it’s essential to use a range of testing techniques and tools and to update tests regularly. When existing test cases no longer reveal new defects, it’s time to implement new cases or incorporate new techniques and tools.

Similarly, AI systems must be constantly monitored for the pesticide paradox, whose counterpart in machine learning is “overfitting”: a system tuned against the same test data for too long may handle those tests well without being better in general. Although in practice an AI system can never be entirely free of bugs, it’s essential to update test datasets and cases, and possibly introduce new metrics or tools, once the system can easily handle existing tests.

Take the example of a noise cancellation algorithm that initially worked almost flawlessly on audio samples with low-intensity noise. To enhance the algorithm’s quality and discover new bugs, new datasets were created, featuring higher-intensity noises that reached speech loudness.
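A harder dataset of this kind can be produced by mixing speech and noise at a chosen signal-to-noise ratio (SNR). The sketch below is a generic, dependency-free illustration and not Krisp’s data pipeline: the function names are hypothetical, signals are plain lists of float samples, and SNR is defined from RMS levels as 20·log10(speech RMS / noise RMS), so 0 dB means the noise is as loud as the speech.

```python
import math
from typing import List, Sequence


def rms(samples: Sequence[float]) -> float:
    """Root-mean-square level of a signal."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def mix_at_snr(speech: Sequence[float], noise: Sequence[float], snr_db: float) -> List[float]:
    """Scale the noise so the speech-to-noise RMS ratio equals snr_db, then add it to the speech."""
    if len(speech) != len(noise):
        raise ValueError("speech and noise must have the same length")
    noise_rms = rms(noise)
    if noise_rms == 0.0:
        raise ValueError("noise is silent and cannot be scaled")
    target_noise_rms = rms(speech) / (10.0 ** (snr_db / 20.0))
    gain = target_noise_rms / noise_rms
    return [s + gain * n for s, n in zip(speech, noise)]


# Synthetic stand-ins for real recordings (illustration only).
speech = [math.sin(2.0 * math.pi * 5.0 * i / 100.0) for i in range(100)]
noise = [0.1 if i % 2 == 0 else -0.1 for i in range(100)]

easy_mix = mix_at_snr(speech, noise, snr_db=20.0)  # low-intensity noise
hard_mix = mix_at_snr(speech, noise, snr_db=0.0)   # noise as loud as speech
```

Sweeping `snr_db` downward yields progressively harder test sets from the same source recordings, so the test suite can keep pace with the algorithm.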

By avoiding the pesticide paradox in both software and AI testing, we can protect our systems and ensure they remain effective in their respective fields.

6. Testing is context dependent

Testing methods must be tailored to the specific context in which they will be implemented. This means that testing is always context-dependent, with each software or AI system requiring unique testing approaches, techniques, and tools.

In the realm of AI, the context includes a range of factors, such as the system’s purpose, the characteristics of the user base, whether the system operates in real-time or not, and the technologies utilized.

Context is of paramount importance when it comes to developing an effective testing strategy, techniques, and tools for the NC algorithms. Part of this context involves understanding the needs of the users, which can assist in selecting appropriate testing tools such as microphone devices. As a result, an understanding of context enables the reproduction and testing of users’ real-life scenarios.

By considering context, it becomes possible to create better-designed tests that accurately represent actual use cases, leading to more reliable and accurate results. Ultimately, this approach can ensure that testing is more closely aligned with real-world situations, increasing the overall effectiveness and utility of the NC algorithm.

7. Absence-of-errors is a fallacy

The notion that the absence of errors can be achieved through complete test coverage is a fallacy. In some cases, there may be unrealistic expectations that every possible test should be run and every potential case should be checked. However, the principles of testing remind us that achieving complete test coverage is practically impossible. Moreover, it is incorrect to assume that complete test coverage guarantees the success of the system. For example, the system may function flawlessly but still be difficult to use and fail to meet the needs and requirements of users from the product perspective. The same applies to AI systems.

Consider, for instance, the NC algorithm, which may have excellent quality, canceling out noise while maintaining speech clarity, making it virtually free of defects. However, if it is too complex and too large, it may be useless as a product.
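This idea can be expressed as a release gate that checks product constraints alongside quality. The sketch below is hypothetical throughout: the metric names, thresholds, and candidate values are invented for illustration and are not Krisp’s actual release criteria.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Candidate:
    name: str
    quality_score: float  # hypothetical objective quality metric, higher is better
    cpu_percent: float    # hypothetical average CPU usage on a reference device
    model_size_mb: float


@dataclass(frozen=True)
class ProductBudget:
    min_quality: float
    max_cpu_percent: float
    max_model_size_mb: float


def release_blockers(candidate: Candidate, budget: ProductBudget) -> List[str]:
    """Return the reasons a candidate cannot ship; an empty list means it passes the gate."""
    blockers = []
    if candidate.quality_score < budget.min_quality:
        blockers.append("quality below target")
    if candidate.cpu_percent > budget.max_cpu_percent:
        blockers.append("CPU usage over budget")
    if candidate.model_size_mb > budget.max_model_size_mb:
        blockers.append("model too large")
    return blockers


# Illustrative numbers only.
BUDGET = ProductBudget(min_quality=4.0, max_cpu_percent=10.0, max_model_size_mb=50.0)
LARGE = Candidate("nc-large", quality_score=4.5, cpu_percent=38.0, model_size_mb=250.0)
SMALL = Candidate("nc-small", quality_score=4.1, cpu_percent=6.0, model_size_mb=20.0)

for candidate in (LARGE, SMALL):
    print(candidate.name, release_blockers(candidate, BUDGET) or "ready to ship")
```

In this example the higher-quality candidate is the one that gets blocked, which is exactly the situation the principle warns about.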

Conclusion

To sum up, AI systems have unique lifecycles and require a different approach to testing from traditional software systems. Nevertheless, the seven principles of software testing outlined by the ISTQB can be applied to AI systems. Applying these principles can help identify critical defects, prioritize testing efforts, and optimize testing activities so that the most important defects are detected and addressed first. All of Krisp’s algorithms have demonstrated that adhering to these principles leads to improved accuracy, higher quality, and increased reliability and safety of the AI system in various fields.

The article is written by:

- Tatevik Yeghiazaryan, BSc in Software Engineering, Senior QA Engineer